Navigating the AI-Driven Search Landscape: A Comprehensive Guide to Generative Engine Optimization (GEO)

I. Executive Summary

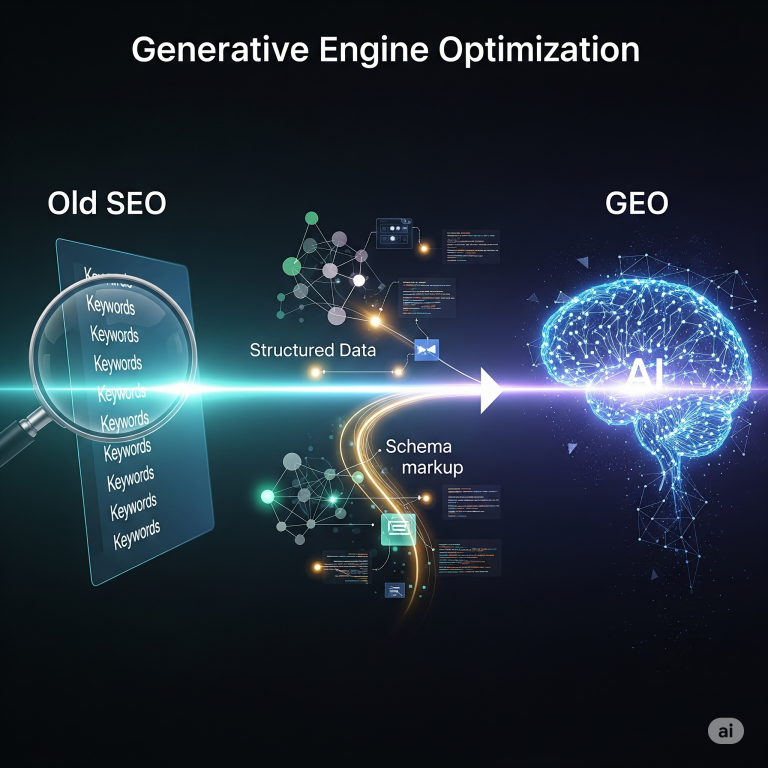

The digital marketing landscape is undergoing a profound transformation, driven by the rapid evolution of artificial intelligence in search. This report delves into Generative Engine Optimization (GEO), a strategic framework essential for achieving visibility and influence in this new AI-powered environment. It outlines the fundamental shift from traditional keyword-centric SEO to an intent-driven, conversational search paradigm, emphasizing the critical role of structured data, advanced content strategies, and evolving measurement frameworks.

A key observation is that search is shifting from keyword matching to intent-driven, conversational AI interactions, which is reshaping how users discover and consume information. Structured data, specifically Schema Markup, is no longer optional. Beyond powering traditional rich results, it has become a critical enabler of AI comprehension and a prerequisite for success in Generative Engine Optimization. To be surfaced and cited by AI, content must be factually accurate, contextually rich, and designed to be machine-readable as well as human-readable. Success metrics are also evolving, from clicks and rankings toward citation frequency, source-attribution quality, and depth of engagement within AI-generated responses, which calls for new measurement frameworks. Proactively addressing ethical considerations, including AI hallucinations, algorithmic bias, and data privacy, is essential for building trust and sustaining GEO success. Finally, Large Language Models (LLMs) are trending toward more efficient, specialized, and multimodal systems, so marketing and content strategists will need to keep adapting and developing new skills.

II. The Paradigm Shift: From Traditional SEO to Generative Engine Optimization (GEO)

The Evolving Search Landscape: How AI is fundamentally reshaping user interactions and content discovery.

The traditional approach to Search Engine Optimization (SEO), which focuses primarily on achieving high rankings in search results, is rapidly giving way to a new emphasis on relevance and direct answers within generative AI outputs. This marks a significant change in how digital content gains visibility and in how users behave. Generative AI is driving a major overhaul of the Google Search experience, allowing users to ask questions that are longer, more intricate, and more conversational than typical keyword queries. The growth of AI search reflects this shift toward natural-language interaction: search interest in “AI search” has reportedly risen by 3,233% over the last five years, indicating rapid adoption of these new search paradigms.

A significant development observed is the decoupling of content discovery from direct website traffic. Research indicates a critical shift where “search impressions are up,” yet “clicks are down,” suggesting that Google’s AI Mode era has fundamentally altered the relationship between content visibility and direct website engagement. This phenomenon signifies that users are increasingly obtaining answers directly from AI overviews or summaries, thereby reducing the necessity to click through to the original website. For content strategists, this necessitates a fundamental re-evaluation of how success is measured. While direct website traffic remains crucial for content designed to drive conversions, the primary objective for informational content is now shifting towards achieving prominence and influence within AI-generated responses. This requires optimizing content for direct answers and comprehensive summaries, even if it does not consistently result in immediate website clicks. The value proposition is transitioning from merely driving navigation to establishing brand presence and authority within the AI’s synthesized output. This evolution also correlates with an increase in “quality clicks”—those where users do not quickly navigate away—as AI overviews provide initial context, prompting users to click through for more in-depth exploration of topics.

Another profound transformation is the shift from simple keyword matching to comprehensive intent comprehension. Traditional keyword search primarily focuses on matching static terms, whereas Large Language Model (LLM)-powered conversational search is designed to interpret natural, context-rich questions. This evolution means that search is “no longer about matching a phrase to a page” but rather about understanding the user’s underlying intent—the “why” behind their query. Consequently, content creation must transcend basic keyword optimization. It needs to be designed to address the full spectrum of user intent, anticipating follow-up questions and providing comprehensive, nuanced answers. This demands a deeper understanding of the customer journey and a strategic approach to content architecture that covers entire topics rather than isolated keywords, aligning seamlessly with the principles of semantic SEO.

Defining Generative Engine Optimization (GEO): A strategic framework for visibility in AI-powered search.

Generative Engine Optimization (GEO) is fundamentally concerned with achieving relevance and visibility within the generative output of AI search engines, moving beyond the conventional focus on search engine rankings. It represents a strategic reorientation that surpasses traditional SEO, concentrating on ensuring that a brand’s content actively influences Large Language Models (LLMs) and appears prominently where users seek answers from AI. The implementation of schema markup is explicitly identified as an “entry ticket” to achieving success in Generative Engine Optimization, underscoring its foundational importance in this evolving landscape.

The current environment also underscores the need to build infrastructure proactively. The view that implementing structured data now is “smarter than waiting till it happens and then having to react” captures this imperative. Although AI models are increasingly proficient at extracting meaning from unstructured content, proactively structuring data positions a brand to adapt more effectively as AI search evolves. GEO is therefore not merely a reactive adjustment to current trends but an investment in future-proofing a brand’s digital presence. Organizations that implement structured data and design content for AI comprehension today stand to gain a significant competitive advantage, and they reduce the risk of being marginalized if maturing AI search systems come to rely even more heavily on explicitly structured inputs. Structured data is best treated as long-term infrastructure rather than a short-term tactical response.

The Foundational Role of Large Language Models (LLMs): How LLMs process, understand, and synthesize information for search.

Large Language Models (LLMs) are AI systems trained on extensive text datasets, which enables them to comprehend and generate human-like language. They are designed to interpret natural, context-rich questions and to respond conversationally, in a way that closely mirrors human dialogue. LLMs interpret natural language with considerable accuracy, handling the subtleties of grammar, syntax, and semantics without requiring explicit instructions or pre-structured data, although ambiguous content can still be misread.

A significant aspect of the AI search landscape is the synergy between Schema and Natural Language Processing (NLP). While LLMs are “incredibly good at extracting meaning and context from unstructured content,” schema markup provides “clearer signals” and “eliminates ambiguity.” Parsing unstructured text via NLP is also described as a “costly exercise” for search engines. In other words, although AI can process unstructured data, structured data makes that processing more efficient and precise. Schema markup is therefore not redundant given AI’s NLP capabilities but a powerful complement: it acts as a direct, unambiguous instruction set that reduces both the computational burden and the potential for misinterpretation inherent in NLP. A balanced content strategy follows from this: create high-quality, human-readable content with a natural flow, then augment it with precise schema markup so that it is unequivocally machine-readable. This dual optimization maximizes the likelihood that AI systems will accurately understand, synthesize, and cite the content.

III. Structured Data: The Language of AI Search

Understanding Schema Markup: What it is and why it’s indispensable for AI.

Schema Markup is a standardized vocabulary of structured data, maintained at Schema.org, that helps search engines, and increasingly LLM-based AI tools, understand the content of a website. It is typically implemented in the JSON-LD format, embedded within the HTML of a page, and provides explicit context about the content, ranging from detailed product information and customer reviews to articles and frequently asked questions. At its core, schema markup improves efficiency by delivering structured information as key-value pairs, which eliminates ambiguity. This gives search engines explicit answers rather than forcing them to infer meaning through complex and resource-intensive Natural Language Processing (NLP).
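
To make the key-value idea concrete, here is a minimal sketch in Python that serializes a placeholder Article description into a JSON-LD `<script>` element. The headline, date, and author name are hypothetical, and the `to_script_tag` helper is illustrative rather than part of any library; only the `@context`, `@type`, and property names come from the Schema.org vocabulary.

```python
import json

# Placeholder article metadata; every value here is hypothetical.
article = {
    "@context": "https://schema.org",
    "@type": "Article",
    "headline": "What Is Generative Engine Optimization?",
    "datePublished": "2024-05-01",
    "author": {"@type": "Person", "name": "Jane Doe"},
}


def to_script_tag(data: dict) -> str:
    """Serialize a structured-data dict as a JSON-LD <script> element for a page's HTML."""
    payload = json.dumps(data, indent=2, ensure_ascii=False)
    # Escape "</" so a value containing "</script>" cannot close the element early.
    payload = payload.replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{payload}\n</script>'


print(to_script_tag(article))
```

Escaping `</` as `<\/` is a defensive choice: JSON parsers read `\/` as a plain slash, so the data is unchanged, but the browser will not mistake a string value for the end of the script element.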

A deeper examination reveals schema’s function as a “page-level knowledge graph.” The analysis highlights that schema assists search engines in comprehending the relationships between various entities on a webpage, effectively creating a “lightweight page-level knowledge graph”. This extends beyond merely labeling individual data points; it establishes interconnectedness among them. The implication is that effective schema implementation involves constructing a semantic web within a brand’s own digital properties. By explicitly defining how entities relate to one another (for instance, an Article authored by a Person who is affiliated with an Organization), content creators furnish AI with a richer, more interconnected data model. This significantly enhances the AI’s capacity to synthesize information accurately and deliver comprehensive, contextually relevant answers, particularly for complex queries that necessitate drawing connections between disparate pieces of information. This structured relational data renders the AI’s interpretation far more robust and reliable.
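
The Article, Person, Organization chain described above can be sketched as a single JSON-LD `@graph` in which nodes reference each other by `@id` instead of repeating their details. This is an illustrative example assuming a hypothetical domain and names; the `resolve` helper only mimics, in a toy way, how a consumer might follow those references.

```python
SITE = "https://www.example.com"  # hypothetical domain

graph = {
    "@context": "https://schema.org",
    "@graph": [
        {
            "@type": "Organization",
            "@id": f"{SITE}/#organization",
            "name": "Example Co.",
            "url": SITE,
        },
        {
            "@type": "Person",
            "@id": f"{SITE}/#jane-doe",
            "name": "Jane Doe",
            "jobTitle": "Head of Content",
            # Points at the Organization node by its @id instead of repeating it.
            "affiliation": {"@id": f"{SITE}/#organization"},
        },
        {
            "@type": "Article",
            "@id": f"{SITE}/geo-guide/#article",
            "headline": "A Guide to Generative Engine Optimization",
            "author": {"@id": f"{SITE}/#jane-doe"},
            "publisher": {"@id": f"{SITE}/#organization"},
        },
    ],
}


def resolve(graph: dict, node_id: str) -> dict:
    """Find a node in the @graph by its @id (a toy version of reference resolution)."""
    return next(node for node in graph["@graph"] if node.get("@id") == node_id)


article = graph["@graph"][2]
author = resolve(graph, article["author"]["@id"])
org = resolve(graph, author["affiliation"]["@id"])
print(author["name"], "->", org["name"])  # Jane Doe -> Example Co.
```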

Direct Impact on AI-Driven Results: How structured data enhances content interpretation and surfacing.

Schema markup is indispensable for AI engines to efficiently extract content into rich answers, concise summaries, or suggested actions within generative search results. Without it, valuable content may remain “invisible” to AI-driven outputs. Strategic deployment can significantly boost click-through rates by enabling rich snippets and can raise overall visibility across AI-powered features, including voice search, featured snippets, and Google’s AI Overviews. By organizing data into explicit categories and properties, schema gives AI systems the context they need to recognize patterns and to generate accurate, consistent results.

The analysis further indicates that schema serves as a direct signal of content reliability for AI. The more context provided through structured data, the more AI engines can trust and utilize the content. This implies a direct correlation between the clarity and structure afforded by schema and the AI’s assessment of content reliability. Beyond merely aiding comprehension, schema contributes to the perceived trustworthiness of content by AI systems. When information is presented in a clearly defined, consistent, and unambiguous structured format, it significantly reduces the AI’s “cognitive load” in validating the information. This increases the likelihood of the content being selected for inclusion in AI-generated responses, particularly in an era where AI hallucinations and misinformation are substantial concerns. Structured data thus provides a verifiable and reliable foundation for AI’s outputs, bolstering confidence in the information delivered.

Essential Schema Types for GEO: Deep dive into Article, FAQ, Product, Local Business, and How-To schema.

Several schema types are particularly valuable for optimizing content for generative AI visibility:

- Article Schema: This type informs search engines that the published content is a distinct article, making it eligible for prominent display in relevant search results.

- FAQ Schema: Implementing FAQ schema significantly increases the likelihood of frequently asked questions and their corresponding answers being directly extracted into AI-generated responses or featured answer boxes.

- Product Schema: This schema type is indispensable for e-commerce websites, as it enables the display of critical product data such as pricing, customer reviews, and availability directly within search results.

- Local Business Schema Markup: Crucial for both local SEO and GEO, this schema helps AI engines accurately identify a business’s physical location, specific offerings, and how to connect users to its services. It includes vital details like the business name, address, phone number, operating hours, and geo-coordinates.

- How-To Schema: Highly effective for step-by-step instructions, this schema makes content suitable for appearance in voice-activated or visual AI results.
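
As an illustration of the Local Business fields listed above, the following sketch builds LocalBusiness markup with an address, phone number, opening hours, and geo-coordinates. Every value is a placeholder, and the `missing_nap_fields` check is a hypothetical convenience for catching absent name, address, or phone details, not a Google requirement.

```python
import json

# Placeholder business details; all values are hypothetical.
local_business = {
    "@context": "https://schema.org",
    "@type": "LocalBusiness",
    "name": "Example Bakery",
    "telephone": "+1-555-0100",
    "address": {
        "@type": "PostalAddress",
        "streetAddress": "123 Main St",
        "addressLocality": "Springfield",
        "postalCode": "12345",
        "addressCountry": "US",
    },
    "geo": {"@type": "GeoCoordinates", "latitude": 40.0, "longitude": -75.0},
    "openingHoursSpecification": [
        {
            "@type": "OpeningHoursSpecification",
            "dayOfWeek": ["Monday", "Tuesday", "Wednesday", "Thursday", "Friday"],
            "opens": "08:00",
            "closes": "17:00",
        }
    ],
}


def missing_nap_fields(data: dict) -> list[str]:
    """Return which of name/address/telephone (the 'NAP' details) are absent or empty."""
    return [key for key in ("name", "address", "telephone") if not data.get(key)]


print(json.dumps(local_business, indent=2))
print(missing_nap_fields(local_business))  # []
```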

The Schema.org vocabulary encompasses a wide array of types beyond these core examples, including CreativeWork and its subtypes (such as Book, Movie, MusicRecording, and Recipe), Event, Organization, Person, Place, Review, and Action. All of these can provide valuable context to AI systems, enriching their understanding of the content.

Specific schema types also appear to act as direct cues for AI output formats. The emphasis on types like FAQ and How-To for providing “quick SEO wins” and increasing the “odds of having FAQs pulled into AI-generated responses or featured boxes” suggests a functional relationship between these schema types and the way AI structures its answers. Content creators should therefore prioritize schema types that align with the answer formats they want to appear in: FAQ schema for direct Q&A formats, How-To schema for step-by-step guidance. Note that in 2023 Google restricted FAQ rich results to well-known government and health websites and stopped showing How-To rich results, so the value of these types may now lie mainly in making content easier for AI systems to parse rather than in a guaranteed rich-result display. This targeted use of schema goes beyond general SEO best practice to optimize for specific AI output formats, improving a brand’s visibility in the most prominent AI-driven search features.
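
A minimal sketch of FAQPage markup, assuming the questions and answers are already published visibly on the page (Google’s structured data guidelines require marked-up content to be visible to users). The `faq_page` function and the sample questions are hypothetical; the `mainEntity`, `Question`, and `acceptedAnswer` structure follows Schema.org.

```python
import json


def faq_page(pairs: list[tuple[str, str]]) -> dict:
    """Build FAQPage JSON-LD from (question, answer) pairs shown on the page."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


# Hypothetical Q&A content for illustration.
faq = faq_page([
    ("What is GEO?",
     "Generative Engine Optimization is the practice of making content easy "
     "for AI search engines to understand and cite."),
    ("Which format does Google recommend for schema markup?",
     "JSON-LD."),
])
print(json.dumps(faq, indent=2))
```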

Strategic Implementation Best Practices: Focusing on JSON-LD, validation, and advanced techniques like schema nesting and E-E-A-T alignment.

Effective implementation of schema markup involves a systematic approach:

- Identify Relevant Content Types: The process begins by determining the specific categories of content published on the website, such as blog posts, customer reviews, service pages, or product pages.

- Utilize Schema.org Vocabulary: Consult Schema.org to choose the appropriate type and its properties; many type pages include example markup. JSON-LD is Google’s recommended format because it is easy to implement and maintain.

- Integrate into Website’s HTML: Embed the JSON-LD code within the <head> or <body> elements of the HTML page. JSON-LD’s non-interleaved nature with user-visible text simplifies the expression of nested data items. It can also be dynamically injected via JavaScript after the page loads.

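- Test and Validate Markup: During development, use Google’s Rich Results Test to validate the structured data and preview how it might appear in Google Search. The Rich Results Test and the Schema Markup Validator (validator.schema.org) replaced Google’s retired Structured Data Testing Tool; the latter checks markup against the full Schema.org vocabulary rather than only Google-supported features.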

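- Monitor Performance: Continuously track clicks and impressions in Google Search Console. Note that Search Console counts appearances in AI features such as AI Overviews within its overall Web search performance data rather than reporting them as a separate category, so AI-specific visibility may need to be tracked by other means.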
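
Before running pages through Google’s tools, a quick local check can catch malformed JSON-LD. The sketch below, using only Python’s standard library, extracts `application/ld+json` blocks from HTML and flags invalid JSON, a missing schema.org `@context`, or a missing `@type`. It is a hypothetical pre-flight check, not a substitute for the Rich Results Test, which also evaluates eligibility for specific search features.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collects the raw text of every <script type="application/ld+json"> element."""

    def __init__(self) -> None:
        super().__init__()
        self._in_jsonld = False
        self.blocks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def check_jsonld(html: str) -> list[str]:
    """Return a list of problems found; an empty list means these basic checks passed."""
    parser = JsonLdExtractor()
    parser.feed(html)
    parser.close()
    if not parser.blocks:
        return ["no JSON-LD block found"]
    problems = []
    for i, raw in enumerate(parser.blocks):
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            problems.append(f"block {i}: invalid JSON ({exc.msg})")
            continue
        # A JSON-LD block may hold a single object or an array of objects.
        for node in data if isinstance(data, list) else [data]:
            if not isinstance(node, dict):
                problems.append(f"block {i}: expected a JSON object")
                continue
            if "schema.org" not in str(node.get("@context", "")):
                problems.append(f"block {i}: missing schema.org @context")
            if "@type" not in node and "@graph" not in node:
                problems.append(f"block {i}: no @type or @graph")
    return problems
```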

Beyond these foundational steps, several advanced techniques enhance schema’s effectiveness:

- Maintain Data Freshness: Ensure schema data remains current, especially for dynamic information like local business details.

- Incorporate Social Proof: Embed elements such as ratings and testimonials directly into schema markup to enhance credibility.

- Combine with Core Web Vitals: For optimal results, integrate schema with high-quality content and fast-loading pages, as these factors contribute to overall user experience and AI trust.

- Schema and E-E-A-T Alignment: Schema markup directly supports Google’s E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) guidelines by helping search engines establish identity, credibility, and context for content. This includes using Organization schema to establish a business entity and its relationship to content, and Person schema to disclose author or reviewer details, linking their name, job title, and educational background to build credibility. The sameAs property can link to authoritative external profiles (e.g., social media, Crunchbase, LinkedIn) to validate the entity’s online presence and credibility, while the taxID and vatID properties supply identifiers that can be checked against government records. For local businesses, LocalBusiness schema connects a digital identity with a tangible, real-world presence, fostering trust.

- Advanced Schema Nesting: Nest schema types to make the relationships between content elements or entities on a page explicit. For instance, linking an Organization schema to a Product schema (for example, through the Product’s brand or manufacturer property) allows search engines to understand the broader context of the offerings. Declaring these relationships explicitly reduces the computational effort required for Natural Language Processing (NLP).
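
The E-E-A-T and nesting techniques above can be combined in one graph: an Organization with `sameAs` profiles, an author Person who `worksFor` that Organization, and a Product whose `brand` points back to it. This is a sketch with hypothetical names and URLs; the property names come from Schema.org, but which of them a given search engine or AI system actually uses is not guaranteed.

```python
import json

SITE = "https://www.example.com"  # hypothetical domain

organization = {
    "@type": "Organization",
    "@id": f"{SITE}/#organization",
    "name": "Example Co.",
    "url": SITE,
    "logo": f"{SITE}/logo.png",
    # Hypothetical external profiles that corroborate the entity.
    "sameAs": [
        "https://www.linkedin.com/company/example-co",
        "https://www.crunchbase.com/organization/example-co",
    ],
}

author = {
    "@type": "Person",
    "@id": f"{SITE}/#jane-doe",
    "name": "Jane Doe",
    "jobTitle": "Senior Product Analyst",
    "worksFor": {"@id": organization["@id"]},
    "alumniOf": {"@type": "CollegeOrUniversity", "name": "Example University"},
}

product = {
    "@type": "Product",
    "name": "Example Widget",
    # Nesting: the product's brand refers back to the Organization node.
    "brand": {"@id": organization["@id"]},
    "review": {
        "@type": "Review",
        "author": {"@id": author["@id"]},
        "reviewRating": {"@type": "Rating", "ratingValue": 4, "bestRating": 5},
    },
}

markup = {"@context": "https://schema.org", "@graph": [organization, author, product]}
print(json.dumps(markup, indent=2))
```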

Schema implementation is a strategic component of E-E-A-T building. E-E-A-T is a critical framework Google employs to evaluate content quality and credibility. The analysis explicitly states that schema markup “supports the same goals as E-E-A-T” by enabling content creators to “explicitly declare what your content is about, who it’s for, and who stands behind it”. This establishes a direct, tangible link between a technical implementation and a high-level quality signal prioritized by search engines and AI. The implication is that schema implementation is not merely a technical SEO checklist item; it is a strategic imperative for building brand authority and trust in the eyes of AI. By meticulously applying schema for authors, organizations, and products, brands can provide explicit, machine-readable signals of their experience, expertise, authoritativeness, and trustworthiness. This is particularly crucial as AI systems increasingly prioritize highly credible sources for their generative answers. Therefore, schema implementation should be deeply integrated into a holistic E-E-A-T strategy, ensuring that the structured data consistently reinforces the human-perceptible quality signals presented on the page, leading to higher citation rates and greater AI trust.

Table: Key Schema Types for Generative AI Visibility

This table serves as a concise, actionable reference guide for marketers and content creators. It distills complex information from various sources into an easily digestible format, enabling quick understanding and practical application of the most impactful schema types for enhancing AI visibility. By outlining the specific benefits for AI comprehension, it helps prioritize implementation efforts for maximum Generative Engine Optimization (GEO) impact.

| Schema Type | Description | Primary Benefits for AI Comprehension/Visibility | Example Use Case |
| --- | --- | --- | --- |
| Article | Defines content as a news article, blog post, or scholarly article. | Helps AI recognize content as informational, suitable for summaries and direct answers. | Blog posts, news articles, research papers. |
| FAQPage | Marks up a page containing a list of questions and their answers. | Increases chances of FAQs being pulled directly into AI-generated responses, featured snippets, and “People Also Ask” sections. | Q&A sections on product pages, service pages, or dedicated FAQ pages. |
| Product | Describes a product, including its name, image, description, and offers. | Helps AI understand product details for rich results, comparisons, and direct recommendations. Essential for e-commerce. | E-commerce product pages, product listings. |
| LocalBusiness | Provides details about a local business, such as name, address, phone, and hours. | Crucial for local GEO; helps AI engines connect users to physical services, appearing in local search and voice queries. | Business location pages, contact pages. |
| HowTo | Structures step-by-step instructions for completing a task. | Facilitates AI understanding of sequential processes, making content suitable for voice-activated or visual AI results (e.g., “how-to” guides). | DIY guides, recipes, software tutorials. |
| Organization | Identifies an organization, company, or institution. | Establishes organizational credibility for E-E-A-T, helps AI attribute content to a trusted entity. | “About Us” pages, corporate profiles, company contact information. |
| Person | Defines an individual, often used for authors, experts, or public figures. | Establishes author/expert credibility for E-E-A-T, allows AI to recognize and cite authoritative voices. | Author bios on articles, expert profiles, team pages. |
| Review | Marks up a review of an item or service. | Enables AI to extract and display star ratings and review snippets, enhancing product/service trust signals. | Customer review sections on product or service pages. |
| VideoObject | Describes a video embedded on a page. | Helps AI understand video content, making it discoverable in multimodal search and AI summaries that include video references. | Pages with embedded YouTube videos, video tutorials. |

IV. Crafting Content for the AI Era

Optimizing Content for LLM Consumption: Strategies for clear structure, factual accuracy, and readability.

Large Language Models (LLMs) consistently favor content that is clearly structured, factually rich, and directly answers user questions. To maximize the likelihood of content being mentioned or cited in LLM outputs, optimizing for these preferences is crucial.

Actionable steps for enhancing content structure and quality include:

- Use Descriptive, Concise Headings: Content should be broken down into easily scannable sections using hierarchical headings (H1, H2, H3 tags) that clearly and succinctly describe the topic of each section. This practice aids AI in rapidly identifying key thematic areas.

- Incorporate Bullet Points and Numbered Lists: Key information, benefits, steps in a process, or features should be summarized using list formats. This makes facts and actionable points easily extractable by AI models.

- Create Dedicated FAQ Sections: Common user questions should be directly addressed with concise, clear answers. Using question-based subheadings (e.g., “What is keyword research?”) helps align content with typical user queries in conversational AI.

- Add Comparison Tables: Data should be presented side-by-side (e.g., product features, pricing, service comparisons) with clearly labeled column headers. This simplifies analysis for both human users and AI systems seeking comparative information.

- Ensure Factual Accuracy and Citation: All data and claims must be rigorously cross-checked with reputable sources and cited appropriately. This boosts credibility and reduces the risk of AI hallucination. Vague or unsubstantiated claims should be avoided, as AI models prioritize verifiable information.

- Optimize for Readability: Short sentences and paragraphs improve content flow and comprehension. Aiming for a 6th- to 8th-grade reading level keeps content clear and easily skimmable for a broad audience, and may also make it easier for AI systems to process.
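
Reading level is commonly estimated with the Flesch-Kincaid Grade Level formula, 0.39 × (words ÷ sentences) + 11.8 × (syllables ÷ words) − 15.59. The sketch below applies it with a deliberately rough syllable heuristic (counting vowel groups), so its scores are approximate; dedicated readability tools count syllables more carefully.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, dropping a silent trailing 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate Flesch-Kincaid Grade Level of a passage of English text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        return 0.0
    syllables = sum(count_syllables(w) for w in words)
    return (0.39 * (len(words) / len(sentences))
            + 11.8 * (syllables / len(words))
            - 15.59)


print(round(flesch_kincaid_grade("The cat sat on the mat."), 2))  # -1.45
```

Very short, simple sentences can score below zero; a result between roughly 6 and 8 corresponds to the target range recommended above.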

The internal organization of content, through elements like headings, lists, and tables, acts as a complementary layer of implicit structuring, even beyond explicit schema. This approach aims to make the unstructured body copy semi-structured for more efficient AI processing. The implication is that content creators should adopt a mindset where their articles are not merely human-readable narratives but also machine-readable blueprints. Clear, logical internal structuring guides AI to quickly identify and extract key information, facts, and relationships. This internal organization facilitates the AI’s ability to summarize, answer direct questions, and present information in rich snippets, even without explicit schema for every granular detail. It signifies a fundamental shift in content creation, prioritizing scannability and extractability for both human and AI consumption.
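
To illustrate why heading hierarchy makes body copy semi-structured, this sketch uses Python’s standard `html.parser` to pull an indented outline out of a page’s h1 to h6 elements. It is a toy model under that assumption, not a description of how any particular AI system parses pages, but it shows how much of a page’s structure is recoverable from headings alone.

```python
from html.parser import HTMLParser


class OutlineParser(HTMLParser):
    """Collects (level, text) for each h1-h6 element in document order."""

    def __init__(self) -> None:
        super().__init__()
        self.headings: list[tuple[int, str]] = []
        self._level = 0  # 0 means "not currently inside a heading"

    def handle_starttag(self, tag, attrs):
        if len(tag) == 2 and tag[0] == "h" and tag[1] in "123456":
            self._level = int(tag[1])
            self.headings.append((self._level, ""))

    def handle_endtag(self, tag):
        if self._level and tag == f"h{self._level}":
            self._level = 0

    def handle_data(self, data):
        if self._level:
            level, text = self.headings[-1]
            self.headings[-1] = (level, text + data)


def outline(html: str) -> list[str]:
    """Return headings indented two spaces per level below h1."""
    parser = OutlineParser()
    parser.feed(html)
    parser.close()
    return ["  " * (level - 1) + text.strip() for level, text in parser.headings]


page = "<h1>GEO Guide</h1><p>Intro</p><h2>What is schema?</h2><h3>JSON-LD</h3>"
print("\n".join(outline(page)))
```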

Mastering Semantic SEO and User Intent: Moving beyond keywords to comprehensive topic authority and understanding the “why” behind queries.

Semantic SEO is a content strategy centered on building content around broad topics rather than isolated keywords. Its primary goal is to comprehensively answer all potential user queries related to a specific subject, thereby providing a complete information hub. This comprehensive approach enriches the user experience by providing all necessary information in one place, reducing the likelihood of users needing to navigate to different pages to find answers. Semantic search, which underpins this strategy, describes how search engines interpret the contextual meaning and underlying intent of keywords, leading to more accurate and relevant search engine results page (SERP) outcomes.

Google’s continuous evolution in semantic understanding is evident in major algorithmic updates. Hummingbird, introduced in 2013, interprets the meaning of words in a query to understand context and provide results matching the searcher’s intent. RankBrain, a machine learning system from 2015, further understands the meaning behind queries and serves related search results, also functioning as a ranking factor. BERT, launched in 2019, utilizes Natural Language Processing (NLP) technology for a deeper understanding of search queries, text interpretation, and the identification of relationships between words and phrases. These updates collectively demonstrate Google’s continuous move towards a more sophisticated, semantic understanding of user queries.

Understanding key user intent categories is crucial, as they represent different stages of the customer journey and require tailored content approaches:

- Informational Intent: Users seeking to learn something, often characterized by “how,” “why,” or “what” questions.

- Navigational Intent: Users aiming to reach a specific website or page, already knowing where they want to go.

- Commercial Intent: Users researching products or services but not yet ready to purchase, engaging in comparisons or reading reviews.

- Transactional Intent: Users ready to complete a specific action, typically a purchase, sign-up, or form submission.
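
The four intent categories can be illustrated with a toy rule-based classifier. The cue words below are hypothetical and chosen for illustration only; production search systems infer intent with learned models rather than keyword lists, so treat this as a way to think about mapping queries to content types, not as a working intent detector.

```python
import re

# Hypothetical cue words, checked in this order; the first matching intent wins.
INTENT_CUES = {
    "transactional": ("buy", "order", "sign up", "subscribe", "coupon", "pricing"),
    "commercial": ("best", "vs", "review", "reviews", "compare", "alternatives"),
    "navigational": ("login", "log in", "homepage", "official site"),
    "informational": ("how", "why", "what", "guide", "tutorial"),
}


def classify_intent(query: str) -> str:
    """Label a query with the first intent whose cue appears as a whole word or phrase."""
    # Replace punctuation with spaces, collapse whitespace, and pad with spaces
    # so that cues only match whole words.
    normalized = " " + re.sub(r"[^\w\s]", " ", query.lower()) + " "
    normalized = re.sub(r"\s+", " ", normalized)
    for intent, cues in INTENT_CUES.items():
        if any(f" {cue} " in normalized for cue in cues):
            return intent
    return "informational"  # assumed fallback when no cue matches


for q in ("Buy running shoes online", "HubSpot vs. Salesforce", "Gmail login", "Why is the sky blue?"):
    print(q, "->", classify_intent(q))
```

Checking transactional cues first is a deliberate design choice: a query such as "what is the best price to buy" mixes signals, and the rule order decides which one wins.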

Strategic approaches for semantic SEO include:

- Deep User Intent Analysis: The most critical step is to thoroughly understand the intent behind a search query, anticipating all the information a user might need on a given topic.

- Comprehensive Content Outlines: Develop detailed outlines structured around main sections (H2 headings) and subsections (H3 headings). Each main section should be treated as if it were a standalone article, researched thoroughly to build topical authority.

- Semantic Keyword Optimization: Move beyond single keywords to incorporate multiple conceptually related keywords and phrases. These are often marketed as Latent Semantic Indexing (LSI) keywords, although Google representatives have said that Google does not use LSI. Covering related terms allows content to rank for a broader range of relevant queries.

- Address “People Also Ask” Queries: Actively review and incorporate answers to questions found in Google’s “People Also Ask” sections and related searches into content.

- Target Medium-Tail Keywords: Focus on keywords that are more specific than short-tail but have higher search volume than long-tail. This strategy often leads to ranking for related short and long-tail keywords due to semantic analysis.

Semantic SEO therefore functions as a “user journey optimization” strategy. Its core objective is to provide comprehensive information on a topic, reducing the user’s need to “hop around from one article to another”. This tends to increase the average time users spend on a page, which is widely regarded as a positive engagement signal. By prioritizing topical authority and comprehensive coverage, brands can significantly improve user satisfaction and dwell time. Guided by deep user-intent analysis, this holistic approach positions content as a resource that anticipates and fulfills user needs throughout the information-seeking journey. Such content is more likely to be favored by AI systems designed to provide complete, satisfying answers, leading to higher visibility and engagement in AI search.

Building a Robust Digital Footprint: Establishing credibility and authority for AI systems.

Large Language Models (LLMs) significantly rely on a brand’s overall online presence to assess the authority and relevance of its content. A consistent and authoritative digital footprint across various platforms increases the likelihood of a brand being cited and trusted by AI models.

Actionable steps for optimizing a brand’s digital footprint include:

- Maintain Uniform Branding: Ensure that the brand name, logo, and messaging are consistent across all digital touchpoints, including the website, social media platforms (e.g., LinkedIn, X), and online directories.

- Optimize for E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness): Actively showcase these qualities on the website by including detailed author bios, linking to credible external sources, and displaying trust signals such as SSL certificates and customer reviews.

- Regular Cross-Channel Publishing: Consistently publish blog posts, case studies, and updates on the primary website, and strategically repost or share them on relevant social media platforms like X, LinkedIn, and Facebook.

- Engage in Community Forums: Participate actively in relevant online forums, Q&A sites (e.g., Quora, Reddit), and social platforms to establish thought leadership and organically increase brand mentions.

- Digital PR and Citation Engineering: Proactively seek mentions in credible third-party domains that possess high citation counts and relevance within the industry. This includes conducting podcast interviews and publishing data-driven reports designed to generate citations for high-value queries.

- Optimize Review Platform Profiles: Ensure brand profiles on industry-specific review platforms and marketplaces (e.g., G2, Capterra, Trustpilot) are complete and optimized, as these sources are frequently cited by AI for commercial queries.

- Wikipedia Presence: Strive for accurate, well-cited mentions on Wikipedia, a highly authoritative source favored by AI. If a dedicated page seems appropriate, adhere strictly to Wikipedia’s guidelines. Its notability and conflict-of-interest policies discourage organizations from writing articles about themselves.

- External Media and Listicles: Aim to be featured in industry roundups, “best of” articles, and trusted publications. Links from these external sources significantly build the perception of a brand’s authority in the eyes of AI tools.
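A common way to make the uniform-branding steps above machine-readable is schema.org `Organization` markup, whose `sameAs` property lists the brand’s official profiles. The minimal sketch below generates that JSON-LD in Python. The brand name and every URL are hypothetical placeholders, and which profiles to include is a judgment call.

```python
import json

def organization_jsonld(name, url, logo, profiles):
    """Build a schema.org Organization object; sameAs links the official profiles."""
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,  # use the exact brand name shown on every profile
        "url": url,
        "logo": logo,
        "sameAs": sorted(set(profiles)),  # de-duplicated, stable order
    }

# Hypothetical brand and profile URLs.
markup = organization_jsonld(
    name="Example Co",
    url="https://www.example.com",
    logo="https://www.example.com/logo.png",
    profiles=[
        "https://www.linkedin.com/company/example-co",
        "https://x.com/exampleco",
        "https://www.g2.com/products/example-co",
        "https://x.com/exampleco",  # duplicate, removed by set()
    ],
)

# Paste the output into a <script type="application/ld+json"> element on the site.
print(json.dumps(markup, indent=2))
```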

The digital footprint serves as AI’s “reputation and authority validation.” Large Language Models do not assess a brand’s authority from its owned website alone. Instead, they aggregate signals from its footprint across diverse platforms, in a process analogous to a comprehensive reputation and authority check. As a result, a brand’s presence beyond its owned properties (social media, industry forums, review sites, and external publications) is increasingly critical for Generative Engine Optimization, because AI models use these varied sources to triangulate its credibility and authority. Digital PR, community engagement, and consistent branding across the web are therefore no longer supplementary brand-building activities. They are direct GEO strategies that shape how AI trusts, cites, and positions a brand’s content. This calls for an integrated, cross-functional marketing approach that breaks down traditional silos between SEO, PR, and social media teams.

Adapting to Multimodal Search: Preparing content for diverse input types (e.g., images, video).

The rapid advancement of Multimodal Large Language Models (MLLMs) means that AI can now process and understand information from various modalities beyond text, including images and video. MLLMs are capable of inferring geographic locations from images based solely on visual content, even in the absence of explicit geotags, which introduces new capabilities but also significant privacy risks. Multimodal content assets, encompassing text, video, and user-generated content, are increasingly appearing across modern AI-driven discovery paths. YouTube videos, in particular, are now frequently appearing in ChatGPT’s answers, highlighting the growing importance of video content for AI visibility. Google is also indexing Instagram posts in some instances, indicating that visual content from this platform may appear more often in AI Overviews, especially for local or consumer brands.

Actionable steps for multimodal content optimization include:

- Optimize for LLM-Parsable File Types: Prioritize content formats that LLMs can effectively parse, including HTML, PDFs, and YouTube transcripts.

- Video Optimization (YouTube): For YouTube content, ensure clear headings, descriptive metadata, and accurate transcripts are provided to influence visibility in AI tools.

- Image Optimization (Instagram & Beyond): On visual platforms like Instagram, give each post well-optimized accompanying text, including image alt text and captions, and keep profile information accurate and complete. Also consider optimizing physical product packaging for AI image recognition.
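An alt-text check on owned web pages is easy to automate. The minimal sketch below uses Python’s standard `html.parser` to list images whose `alt` attribute is missing or blank. The sample markup and file names are hypothetical. An empty `alt` is legitimate for purely decorative images, so treat flagged items as candidates for review rather than automatic errors.

```python
from html.parser import HTMLParser

class AltTextAudit(HTMLParser):
    """Collect the src of every <img> whose alt attribute is missing or blank."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        alt = attributes.get("alt")
        # Missing, valueless, or whitespace-only alt text is flagged for review.
        if alt is None or not alt.strip():
            self.missing_alt.append(attributes.get("src", "<no src>"))

# Hypothetical product page markup.
page = """
<article>
  <img src="boot-front.jpg" alt="Waterproof leather work boot, front view">
  <img src="boot-sole.jpg">
  <img src="boot-box.jpg" alt="  ">
</article>
"""

audit = AltTextAudit()
audit.feed(page)
print(audit.missing_alt)  # ['boot-sole.jpg', 'boot-box.jpg']
```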

The growing ability of MLLMs to derive meaning from visual content for tasks like geolocation, coupled with the prominence of platforms like YouTube and Instagram in AI search results, indicates that the scope of SEO is expanding well beyond traditional text-based optimization. Marketers must therefore broaden their SEO strategies to encompass visual and audio content. This involves optimizing not only the text descriptions around images and videos but also the information inherent in the media itself. For example, designing product packaging with AI visual recognition in mind could become a future GEO tactic. This trend calls for closer collaboration between marketing teams and creative/product development teams, so that every content format is discoverable, interpretable, and citable by evolving AI models.

Leveraging AI for Content Personalization: Tailoring experiences at scale.

AI content personalization leverages artificial intelligence to create highly customized marketing experiences at an unprecedented scale. This is achieved by analyzing extensive user data, including browsing history, purchase behavior, and demographic information. The core objective is to deliver targeted content that deeply resonates with each consumer’s unique preferences, which in turn enhances customer satisfaction and fosters stronger brand loyalty. AI systems are uniquely capable of rapidly analyzing vast datasets to uncover intricate patterns in user behavior that would be imperceptible or too time-consuming for humans to identify. AI can automate the dynamic delivery of personalized content in real-time, ensuring both relevance and immediacy for the user. This is particularly effective for websites and email campaigns where content can adapt dynamically based on user interactions.

Key benefits of AI-driven personalization include:

- Scalability: AI enables marketers to engage with millions of individual users, a feat impossible through manual means, ensuring consistent delivery of personalized experiences across diverse customer segments.

- Enhanced User Experience: Personalized content increases consumer interaction and engagement, leading to improved customer loyalty and reduced churn rates.

- Increased Conversion Rates: Tailored content, delivered by AI, can significantly boost conversion rates and sales.

- Resource Efficiency: AI reduces the need for extensive human resources in content creation and delivery, allowing marketers to focus on strategy and creativity.

- Data-Driven Decision Making: AI provides valuable insights into user behavior and campaign performance, enabling quick, informed decisions and optimizations.

- Consistent Brand Messaging: AI ensures that personalized content maintains consistent messaging aligned with brand values across all customer touchpoints.

AI enhances content personalization through several mechanisms, including predictive analytics (forecasting future user behavior), behavioral analysis (creating accurate consumer profiles), Natural Language Processing (NLP) for human-like text generation, recommendation engines, and A/B testing optimization.
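To illustrate the recommendation-engine mechanism, here is a minimal content-based sketch. A user’s inferred interests and each content item are represented as weighted tags, and items are ranked by cosine similarity. The tag weights, item names, and the choice of cosine similarity are illustrative assumptions. Production engines often combine this approach with collaborative filtering and other behavioral signals.

```python
import math

def cosine(a, b):
    """Cosine similarity between two sparse vectors stored as {term: weight} dicts."""
    dot = sum(weight * b.get(term, 0.0) for term, weight in a.items())
    norm_a = math.sqrt(sum(w * w for w in a.values()))
    norm_b = math.sqrt(sum(w * w for w in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def recommend(user_profile, catalog, k=2):
    """Return the k catalog items most similar to the user's interest profile."""
    scored = [(cosine(user_profile, tags), item) for item, tags in catalog.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [item for _, item in scored[:k]]

# Hypothetical interest weights inferred from browsing and purchase history.
user = {"hiking": 0.9, "boots": 0.7, "camping": 0.2}

# Hypothetical content items described by weighted topic tags.
catalog = {
    "trail-boot-guide": {"hiking": 1.0, "boots": 1.0},
    "tent-buying-guide": {"camping": 1.0, "gear": 0.5},
    "office-shoe-lookbook": {"fashion": 1.0, "shoes": 0.8},
}

print(recommend(user, catalog))  # ['trail-boot-guide', 'tent-buying-guide']
```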

V. Measuring Generative Engine Optimization (GEO) ROI

Shifting Measurement Paradigms: From clicks and rankings to citations and influence.

Measuring the Return on Investment (ROI) from Generative Engine Optimization (GEO) requires a different approach from traditional SEO, because users consume information differently on AI platforms. Conventional metrics such as click-through rates (CTRs) and page rankings are no longer the sole indicators of success. Instead, GEO ROI demands a deeper understanding of how users interact with generative search platforms such as ChatGPT, Perplexity, and Google’s AI Overviews.

The critical metrics for tracking GEO performance and revenue impact, with a strong emphasis on citation-based performance, include:

- Citation Frequency: The primary visibility indicator across AI platforms. It involves tracking monthly mentions in ChatGPT and Perplexity responses, Google AI Overviews, and Claude answers. This tracking establishes baseline performance before optimization begins and reveals growth trends. Increased citation volume directly contributes to brand awareness growth.

- Source Attribution Quality: In AI search environments, the quality of source attribution holds greater significance than mere quantity. Being referenced as the primary source for authoritative responses carries substantially more weight than multiple peripheral mentions in less relevant contexts. Citation context analysis provides qualitative insights into how AI platforms position a brand’s expertise, allowing monitoring of whether content is cited for basic information or for complex, high-value topics that demonstrate thought leadership and subject matter authority.

- Response Ranking within AI Answers: The position of content within AI answers indicates its authority level. Tracking whether content appears first, second, or third among cited sources is important, as position significantly impacts user perception and the likelihood of further brand engagement.

Additional contextual metrics include response context (topic relevance scoring) to understand market positioning and competitive displacement (share of voice changes) to indicate market share gains.
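The citation metrics above can be computed from a log of AI answers collected by periodically re-running a fixed set of tracked prompts. The sketch below assumes that each logged answer records its platform and its cited domains in display order. The domains are hypothetical. Share of voice is defined here, as one possible choice, as the brand’s fraction of all citations in the sample.

```python
from collections import Counter

# Hypothetical log of answers to tracked prompts; citations are in display order.
answers = [
    {"platform": "ChatGPT", "citations": ["example.com", "rival.com"]},
    {"platform": "Perplexity", "citations": ["rival.com", "example.com", "wiki.org"]},
    {"platform": "Google AI Overviews", "citations": ["rival.com"]},
    {"platform": "Claude", "citations": ["example.com"]},
]

def citation_metrics(answers, brand):
    """Citation frequency, average position when cited, and share of voice."""
    positions = []  # 1-based position of the brand in each answer that cites it
    for answer in answers:
        if brand in answer["citations"]:
            positions.append(answer["citations"].index(brand) + 1)
    all_citations = Counter(d for a in answers for d in a["citations"])
    total = sum(all_citations.values())
    return {
        "citation_frequency": len(positions),
        "avg_position": sum(positions) / len(positions) if positions else None,
        "share_of_voice": all_citations[brand] / total if total else 0.0,
    }

print(citation_metrics(answers, "example.com"))
```

Running the same function for each competitor domain gives the share-of-voice comparison, and repeating it month over month shows competitive displacement.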

The shift from keyword analysis to contextual understanding for user intent is a key driver of these new measurement paradigms. Traditional keyword analysis is often too simplistic, as the same keywords can signal different intents depending on context. AI models, such as IntentGPT, analyze the entire webpage—its content, structure, and user engagement patterns—to identify signals of genuine user intent, moving beyond broad keyword matching to deep contextual understanding. This allows for more precise targeting and messaging, which in turn influences citation quality and engagement within AI responses.

Key Performance Indicators (KPIs) for Generative AI Success: User engagement, latency, and throughput.

Measuring the success of generative AI initiatives extends beyond traditional marketing metrics to encompass operational and user-centric KPIs.

- Operational and System Performance Metrics:

  - Uptime: The percentage of time a system is available and operational, indicating reliability.

  - Error Rate: The percentage of requests resulting in errors, providing insight into system issues such as quotas, capacity, or data validation.

  - Model Latency: The time an AI model takes to process a request and generate a response. It is crucial for identifying subpar user experiences and hardware upgrade needs.

  - Retrieval Latency: The time required to process a request, retrieve additional data, and return a response. It is vital for real-time data applications.

  - Request Throughput: The volume of requests a system can handle per unit of time, indicating the need for burst capacity.

  - Token Throughput: The volume of tokens an AI platform processes per unit of time. It is critical for appropriate sizing and usage, especially with larger context windows.

  - Serving Nodes: The number of infrastructure nodes handling requests, used to monitor capacity and ensure adequate resources.

  - GPU/TPU Accelerator Utilization: How actively specialized hardware is engaged. It is crucial for identifying bottlenecks, optimizing resource allocation, and controlling costs.

- User Engagement and Satisfaction Metrics:

  - Click-Through Rate (CTR): How many users click on content after seeing it, indicating relevance. Even where overall click volume is stable, click quality is rising, because AI responses provide an overview and users who click through are looking to dive deeper.

  - Time on Site (TOS): How long a user spends on a website or application, reflecting engagement and satisfaction. Longer TOS for media content indicates elevated engagement, while shorter TOS on product pages can signal efficient discovery.

  - Adoption Rate: The percentage of active users for a new AI application. It helps determine whether low adoption stems from lack of awareness or from performance issues.

  - Frequency of Use: How often a user sends queries to a model, providing insight into the application’s usefulness.

  - Session Length / Queries per Session: The average duration of user interaction and the number of queries per session, shedding light on entertainment value or effectiveness at retrieving answers.

  - Query Length: The average number of words or characters per query, illustrating how much context users submit.

  - Thumbs Up/Down Feedback: Measures user satisfaction and provides human feedback to refine future model responses.
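Several of the operational KPIs can be derived directly from request logs. The sketch below assumes a hypothetical log of `(timestamp, latency, success, tokens)` tuples. It computes error rate, nearest-rank latency percentiles, and request and token throughput over the logged window. Real monitoring stacks usually compute these over rolling windows with more robust percentile estimators.

```python
import math

# Hypothetical request log: (timestamp_s, latency_ms, succeeded, tokens_processed)
requests = [
    (0.0, 420, True, 350),
    (0.5, 380, True, 410),
    (1.2, 1900, False, 0),
    (2.0, 510, True, 600),
    (2.8, 450, True, 380),
    (3.9, 2400, True, 1200),
]

def percentile(values, pct):
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]

def kpis(log):
    """Summarize a time-ordered request log into operational KPIs."""
    window_s = (log[-1][0] - log[0][0]) or 1.0  # span from first to last request
    latencies = [latency for _, latency, _, _ in log]
    errors = sum(1 for _, _, ok, _ in log if not ok)
    tokens = sum(count for _, _, _, count in log)
    return {
        "error_rate": errors / len(log),
        "p50_latency_ms": percentile(latencies, 50),
        "p95_latency_ms": percentile(latencies, 95),
        "requests_per_s": len(log) / window_s,
        "tokens_per_s": tokens / window_s,
    }

print(kpis(requests))
```

The gap between p50 and p95 latency is often more informative than the mean, because a few slow requests can dominate perceived experience.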

These KPIs are also shaped by the evolution of LLM architectures toward combining learning and search. Recent reasoning LLMs, such as OpenAI’s o3, demonstrate the power of this combination, achieving performance that has been described as superhuman. Traditional LLMs tend to “blurt out the first thing that comes to mind.” These models can instead “think harder” by breaking complex problems into smaller steps, outlining, planning, backtracking, and self-evaluating. Integrating training and inference through reinforcement learning and chain-of-thought reasoning enables more robust and accurate problem-solving. This directly affects the quality, relevance, and efficiency of AI-generated responses and, in turn, user engagement and satisfaction metrics.

Analyzing AI-Driven Traffic: Utilizing Google Analytics and specialized tools.

Analyzing AI-driven traffic in Google Analytics (GA) requires configuring custom channel groups for AI search engines, so that this traffic is attributed correctly in reports. Key traffic parameters in GA include Source (the origin of traffic), Medium (the type of channel), and Referrer (the exact URL visitors came from). AI search engines do not follow a consistent attribution pattern. Clicks from Google’s AI Overviews appear as organic search traffic, while other platforms, like Microsoft Copilot, appear as direct traffic. However, platforms like Perplexity, ChatGPT, and Gemini often pass referrer information that can be used for attribution.

To configure GA for custom channel groupings:

- Navigate to the GA property admin section and access channel group settings.

- Create a new channel group or add to an existing one.

- Add a new channel specifically for AI search engine traffic.

- Define channel conditions using regex for Source (e.g., .*[Pp]erplexity(?:\.ai)?|.*[Cc]hatgpt(?:\.com)?|.*[Gg]emini(?:\.google\.com)?|.*exa\.ai.*|.*iask\.ai.*) and Medium (referral|\(not set\)) to capture various AI sources.

- Crucially, move the new AI search engine channel above the Referral channel. GA evaluates channel conditions in order and assigns each session to the first match, so a Referral channel listed first would absorb the AI traffic.

- Save the changes.
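The ordered evaluation can be simulated to sanity-check the regex before saving it. This sketch assumes that GA treats “matches regex” as a match against the whole value, which is approximated here with Python’s `re.fullmatch`. GA’s own engine may differ in edge cases. The Referral and Organic rules are simplified, hypothetical stand-ins for GA’s default definitions. The example shows why ordering matters: with Referral evaluated first, ChatGPT traffic never reaches the AI channel.

```python
import re

# Source and Medium conditions from the AI search channel definition.
# Assumption: GA matches against the whole value, approximated with fullmatch.
AI_SOURCE = re.compile(
    r".*[Pp]erplexity(?:\.ai)?|.*[Cc]hatgpt(?:\.com)?|.*[Gg]emini(?:\.google\.com)?"
    r"|.*exa\.ai.*|.*iask\.ai.*"
)
AI_MEDIUM = re.compile(r"referral|\(not set\)")

def ai_search(source, medium):
    return bool(AI_SOURCE.fullmatch(source) and AI_MEDIUM.fullmatch(medium))

# Simplified, hypothetical stand-ins for GA's default channel rules.
def referral(source, medium):
    return medium == "referral"

def organic(source, medium):
    return medium == "organic"

CHANNELS = [("AI Search", ai_search), ("Referral", referral), ("Organic Search", organic)]

def assign_channel(source, medium, channels=CHANNELS):
    """Return the first channel whose rule matches, mirroring ordered evaluation."""
    for name, rule in channels:
        if rule(source, medium):
            return name
    return "Unassigned"

print(assign_channel("chatgpt.com", "referral"))         # AI Search
print(assign_channel("www.perplexity.ai", "(not set)"))  # AI Search
print(assign_channel("news.example.com", "referral"))    # Referral

# With Referral evaluated first, the AI channel never sees ChatGPT traffic.
wrong_order = [CHANNELS[1], CHANNELS[0], CHANNELS[2]]
print(assign_channel("chatgpt.com", "referral", wrong_order))  # Referral
```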

After configuration, data can be compared across channels to determine the value of AI traffic to business outcomes and to identify top-performing pages. AI-driven traffic may not initially lead in users or sessions, but its share is expected to grow over time, potentially affecting paid and organic search traffic.

Specialized AI search visibility tracking tools are emerging to address the complexities of measuring GEO performance. Tools like Rankability’s AI Analyzer, Peec AI, LLMrefs, Scrunch AI, and Profound offer capabilities such as prompt-level testing, competitive citation comparison, share-of-voice analysis, sentiment tracking, and identification of content gaps. These tools are designed to track how brands surface across various AI engines (ChatGPT, Perplexity, Google AI Overviews, Claude, Gemini) and provide actionable insights into citation counts, market share, and brand perception within AI-generated answers. Some platforms, like Scrunch AI, are evolving to create machine-readable content layers for AI crawlers and offer optimization workflows that have delivered significant traffic increases and visibility improvements.

The need for holistic AI content audits is becoming increasingly apparent. Human auditors can miss errors and struggle with scale, making AI tools invaluable for automating content inventory, analyzing performance metrics (page views, bounce rates, social shares, keyword rankings, broken links, internal links, leads, conversions), and identifying content gaps or opportunities. This allows for faster, more accurate audits and improved insights, enabling businesses to produce higher-quality content and test responses to different content types. Integrating these automated tools with GA provides a comprehensive view of content performance across both traditional and AI-driven search environments.
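The rule-based core of an automated audit can be sketched in a few lines. The page export, field names, and thresholds below are hypothetical. An AI-assisted audit tool would add content analysis on top of checks like these rather than replace them.

```python
# Hypothetical page-level export joined from analytics and a site crawl.
pages = [
    {"url": "/guide", "views": 5200, "bounce_rate": 0.41,
     "broken_links": 0, "inbound_internal_links": 14, "conversions": 38},
    {"url": "/old-news", "views": 90, "bounce_rate": 0.88,
     "broken_links": 3, "inbound_internal_links": 1, "conversions": 0},
    {"url": "/pricing", "views": 2100, "bounce_rate": 0.35,
     "broken_links": 1, "inbound_internal_links": 6, "conversions": 52},
]

# Illustrative thresholds; tune them to the site's own baselines.
RULES = {
    "high_bounce": lambda p: p["bounce_rate"] > 0.75,
    "broken_links": lambda p: p["broken_links"] > 0,
    "weak_internal_linking": lambda p: p["inbound_internal_links"] < 3,
    "low_traffic_no_conversions": lambda p: p["views"] < 100 and p["conversions"] == 0,
}

def audit(pages, rules=RULES):
    """Map each URL that trips at least one rule to the names of those rules."""
    report = {}
    for page in pages:
        issues = [name for name, check in rules.items() if check(page)]
        if issues:
            report[page["url"]] = issues
    return report

for url, issues in audit(pages).items():
    print(url, issues)
```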

Case Studies and Measurable Results: Demonstrating GEO’s impact.

Generative Engine Optimization (GEO) is demonstrating tangible results for businesses adapting their content strategies. Success stories highlight how GEO can drive significant improvements in visibility, traffic quality, and conversion rates, moving beyond traditional SEO metrics.

- B2B Software Company: A major B2B software company partnered with a leading GEO agency, seeking direct revenue impact from generative AI optimization. Within six months, their targeted GEO campaign achieved an 8% conversion rate from AI-engine traffic, successfully converting 150 clicks into qualified pipeline leads. This conversion rate notably exceeded their traditional search benchmarks, underscoring the quality advantage of AI-driven traffic.

- Healthline (Medical Content Publisher): Healthline aimed to enhance its content’s visibility and authority within AI-generated responses. By restructuring their medical content to be more “AI-readable” and actively tracking citations across multiple AI platforms, they achieved a 218% increase in AI citations and a 43% rise in qualified traffic from generative search platforms within one year. This success was attributed to treating AI citations as a leading indicator of broader content performance.

- HubSpot: HubSpot focused on connecting AI citations directly to pipeline metrics. By leveraging their data-rich quarterly “State of Marketing” reports and focusing on generating AI citations for their 2025 report, they achieved 15,000+ AI citations. This led to a 76% year-over-year increase in referral traffic from AI platforms and a measurable lift in product demo sign-ups.

- 42DM: This case study exemplifies an integrated approach where GEO strategies influenced traditional SEO metrics. Their comprehensive GEO program delivered a 491% increase in monthly organic clicks and secured 1,407 keywords in the top-10 positions. This significant improvement in traditional metrics was a direct result of GEO strategies that enhanced overall content quality and authority.

- Rocky Brands: This footwear retail company focused on creating SEO-friendly content to boost organic search revenue. Following the implementation of SEO software from BrightEdge, Rocky Brands saw a 30% increase in search revenue, a 74% year-over-year (YoY) revenue growth, and a 13% increase in new users.

- Fugue: As a cloud infrastructure security vendor, Fugue faced high competition. By using Frase.io to optimize their Cloud Security Posture Management (CSPM) page, Fugue’s CSPM page rank improved from 10th position to 1st place.

- STACK Media: This content delivery website for athletes partnered with BrightEdge to increase web traffic. As a result, STACK Media’s website visits increased by 61%, and its average bounce rate per page fell by 73%.

These examples underscore the practicality of measuring Return on Generative Engine Optimization (RoGEO) through citation and influence metrics. Traditional SEO metrics like clicks and rankings are often insufficient for AI search. RoGEO focuses on citation frequency, source attribution quality, and response ranking within AI answers. This requires specialized monitoring tools and advanced attribution modeling to connect AI search citations to conversions and revenue, demonstrating the value of brand awareness and consideration influence throughout the customer journey.
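Once AI-referred sessions are isolated in their own channel, a basic return calculation follows. The sketch below uses the simple formula ROI = (attributed revenue - cost) / cost with hypothetical figures. Last-touch attribution from referral sessions only captures clicks. It therefore likely understates the awareness and consideration influence that citations create, and more advanced attribution modeling would adjust the revenue input.

```python
def channel_roi(sessions, conversions, attributed_revenue, cost):
    """Conversion rate and simple ROI, (revenue - cost) / cost, for one channel."""
    return {
        "conversion_rate": conversions / sessions if sessions else 0.0,
        "roi": (attributed_revenue - cost) / cost if cost else None,
    }

# Hypothetical quarter: sessions from the custom AI search channel,
# revenue attributed to those sessions, and the GEO program's cost.
ai = channel_roi(sessions=2000, conversions=120, attributed_revenue=90_000, cost=30_000)
print(ai)  # {'conversion_rate': 0.06, 'roi': 2.0}
```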

It is important to note that relying solely on AI-generated content can have detrimental effects. A case study by Civille demonstrated that a website relying exclusively on AI-generated content for six months experienced a dramatic traffic drop from approximately 1,600 views to just 350 views, with keyword rankings following a similar downward trend. This indicates that Google values original, high-quality content, and a sudden influx of generic, AI-written posts can negatively impact search rankings. Google may detect AI-heavy sites through volume anomalies (sudden surge in posts), prompt simplicity (predictable patterns), and a missing unique value proposition (lack of unique tone or insights). This emphasizes the necessity of a balanced human-AI content strategy. The optimal approach involves using AI for tasks like keyword research and outlines, then enriching content with human expertise, multi-tiered prompting, and rigorous proofreading, maintaining a balance between AI efficiency and human quality.

VI. Navigating Challenges and Ethical Considerations

Mitigating AI Hallucinations: Strategies for ensuring factual accuracy and reliability.

AI hallucinations occur when an artificial intelligence system generates outputs that appear plausible but are factually incorrect or fabricated, often stemming from misperceived or nonexistent patterns in the data it processes. This phenomenon, which typically arises in machine learning models making confident predictions based on flawed or insufficient training data, can range from amusing mislabeling to serious misjudgments. Root causes include insufficient or biased training data, overfitting (where a model learns noise in training data too well), and faulty model assumptions or architecture.

Mitigating AI hallucinations is crucial for developing trustworthy and reliable AI systems. Specific strategies can reduce the chances of these systems generating misleading or false information:

- Use High-Quality Training Data: The foundation for preventing AI hallucinations lies in using high-quality, diverse, and comprehensive training data. This involves curating datasets that accurately represent the real world, covering various scenarios and edge cases, and ensuring the data is free from biases and errors. Regular updates and expansions of datasets help AI adapt to new information and reduce inaccuracies.

- Use Data Templates: Data templates can serve as structured guides for AI responses, ensuring consistency and accuracy. By defining templates that outline the format and permissible range of responses, AI systems can be restricted from fabricating information, especially in applications requiring specific formats.

- Restrict Data Set: Limiting the dataset to reliable and verified sources prevents AI from learning from misleading or incorrect information. This involves carefully selecting data from authoritative and credible sources and excluding content known to contain falsehoods or speculative information.

- Be Specific with Prompting: Crafting specific prompts drastically reduces the likelihood of AI hallucinations. This means giving clear, detailed instructions that guide the AI toward the desired output: specifying the context and the details required, and asking the model to cite its sources.

- Default to Human Fact-Checking: Incorporating a human review layer remains one of the most effective safeguards. Human fact-checkers can identify and correct inaccuracies AI may not recognize, providing an essential check on the system’s output and improving AI performance over time.
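The data-template strategy can be enforced mechanically by validating model output before it is published. In this sketch, the template (allowed fields, types, and ranges for a hypothetical product-spec answer) and both sample outputs are invented for illustration. Any field outside the template, such as a fabricated award, is reported rather than passed through.

```python
import json

# Hypothetical template: allowed fields, their types, and permissible values.
TEMPLATE = {
    "product": {"type": str},
    "waterproof": {"type": bool},
    "weight_grams": {"type": int, "min": 200, "max": 2500},
    "category": {"type": str, "allowed": {"work", "hiking", "casual"}},
}

def validate(raw_output, template=TEMPLATE):
    """Parse model output as JSON and return a list of template violations."""
    try:
        data = json.loads(raw_output)
    except json.JSONDecodeError as exc:
        return [f"not valid JSON: {exc.msg}"]
    if not isinstance(data, dict):
        return ["top-level value must be an object"]
    problems = [f"unexpected field: {key}" for key in data if key not in template]
    for field, rule in template.items():
        if field not in data:
            problems.append(f"missing field: {field}")
            continue
        value = data[field]
        # Exact type check, so a JSON true is not accepted as an int.
        if type(value) is not rule["type"]:
            problems.append(f"{field}: expected {rule['type'].__name__}")
            continue
        if "min" in rule and not rule["min"] <= value <= rule["max"]:
            problems.append(f"{field}: {value} outside {rule['min']}-{rule['max']}")
        if "allowed" in rule and value not in rule["allowed"]:
            problems.append(f"{field}: {value!r} is not an allowed value")
    return problems

good = '{"product": "Trail Pro", "waterproof": true, "weight_grams": 950, "category": "hiking"}'
bad = ('{"product": "Trail Pro", "waterproof": "yes", "weight_grams": 40, '
       '"category": "hiking", "award": "Best of 2024"}')
print(validate(good))  # []
print(validate(bad))
```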

The importance of multi-agent orchestration for hallucination mitigation is gaining traction. Hallucinations remain a significant challenge, undermining trust in AI systems. Orchestrating multiple specialized AI agents, leveraging Natural Language Processing (NLP)-based interfaces like the Open Voice Network (OVON) framework, can systematically review and refine outputs. This multi-layered approach, where agents communicate assessments of hallucination likelihood and reasons through structured JSON messages, leads to significant reductions in factual errors and clearer demarcation of speculative content. This approach bolsters trust and explainability by providing precise, natural language-based, and machine-readable insights into concerns, the nature of fictional content, and the logic behind identifying them as such, allowing subsequent agents to make more informed and targeted refinements.
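The structured-message pattern can be illustrated with two toy agents. The message fields (`from`, `to`, `assessments`, `hallucination_likelihood`, `reason`) are hypothetical and are not the OVON specification’s schema. The reviewer also uses an exact-match lookup against a small set of trusted facts, where a real system would use an LLM or a retrieval step.

```python
import json

# Hypothetical trusted facts the reviewer checks claims against.
KNOWN_FACTS = {
    "The boot weighs 950 grams.",
    "The boot is waterproof.",
}

def reviewer_agent(draft_claims):
    """First agent: estimate hallucination likelihood per claim, with a reason."""
    assessments = []
    for claim in draft_claims:
        supported = claim in KNOWN_FACTS
        assessments.append({
            "claim": claim,
            "hallucination_likelihood": 0.1 if supported else 0.8,
            "reason": "matches a trusted fact" if supported else "no supporting source found",
        })
    # Structured JSON lets the next agent act on each assessment individually.
    return json.dumps({"from": "reviewer", "to": "refiner", "assessments": assessments})

def refiner_agent(message, threshold=0.5):
    """Second agent: keep supported claims and label likely hallucinations as speculative."""
    payload = json.loads(message)
    refined = []
    for item in payload["assessments"]:
        if item["hallucination_likelihood"] >= threshold:
            refined.append(f"[speculative: {item['reason']}] {item['claim']}")
        else:
            refined.append(item["claim"])
    return refined

draft = ["The boot weighs 950 grams.", "The boot won a 2024 design award."]
for line in refiner_agent(reviewer_agent(draft)):
    print(line)
```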

Addressing Algorithmic Bias: Ensuring fairness and equity in AI-generated content.

Algorithmic bias describes systematic and repeatable errors that result in unfair outcomes, often privileging one group of users over others. Beyond data processing, bias can emerge from design, where algorithms determining resource allocation may inadvertently discriminate. Algorithms may also exhibit an uncertainty bias, offering more confident assessments when larger datasets are available, potentially disregarding data from underrepresented populations.

Specific types of machine learning bias in LLMs include:

- Selection Bias: The inherent tendency of LLMs to favor certain option identifiers regardless of content, often due to token bias (assigning higher probability to specific answer tokens). This can cause performance fluctuations when option ordering is altered.

- Gender Bias: The tendency of models to produce outputs unfairly prejudiced towards one gender, often stemming from training data. For example, associating nurses with women and engineers with men.

- Political Bias: The tendency of algorithms to systematically favor certain political viewpoints or ideologies, influenced by the prevalence of such views in training data.

- Racial Bias: The tendency of models to produce outcomes that unfairly discriminate against or stereotype individuals based on race or ethnicity, often due to training data reflecting historical inequalities. This can manifest in misidentification by facial recognition systems or underestimation of medical needs for minority patients.

- Emergent Bias: Results from the use and reliance on algorithms in new or unanticipated contexts, where models may not have adjusted to new knowledge or shifting cultural norms, potentially excluding groups.
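Selection bias can be measured by reordering the answer options and checking whether the model still picks the same underlying answer. This sketch replaces a real LLM call with a hypothetical stand-in model that always answers `"A"`. With four options, that model agrees with itself in only a quarter of orderings, whereas a content-driven model would score 1.0.

```python
from itertools import permutations

LABELS = "ABCD"

def biased_model(question, options):
    """Hypothetical stand-in for an LLM call: ignores content, always answers 'A'."""
    return "A"

def selection_consistency(model, question, options):
    """Share of option orderings in which the model picks the same underlying answer."""
    picks = []
    for ordering in permutations(options):
        label = model(question, ordering)
        picks.append(ordering[LABELS.index(label)])  # map label back to content
    most_common = max(set(picks), key=picks.count)
    return picks.count(most_common) / len(picks)

options = ["Paris", "Lyon", "Nice", "Lille"]
print(selection_consistency(biased_model, "Capital of France?", options))  # 0.25
```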

A critical concern is the potential for “AI-AI bias,” in which LLM assistants in decision-making roles implicitly favor LLM-based AI agents and LLM-assisted humans over ordinary humans as trade partners or service providers. In experiments, LLMs consistently chose LLM-presented items more often than human evaluators did. The resulting “gate tax” (the price of access to frontier LLMs) could widen the digital divide and marginalize human economic agents.

Addressing algorithmic bias requires careful examination of training data, improved transparency in algorithmic processes, and efforts to ensure fairness throughout the AI development lifecycle. Organizations should identify a cross-functional audit team (including compliance, HR, IT, legal) to conduct AI use mapping, assess potential bias (including evaluating training data representativeness and disparate impact), maintain transparency and documentation, and review vendor contracts for liability and data security provisions. Training for individuals involved in AI processes should cover bias recognition and appropriate use cases.
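For the disparate-impact part of the assessment, a common first screen is the four-fifths rule from the U.S. Uniform Guidelines on Employee Selection Procedures. Under this rule, a group whose selection rate is below 80% of the most-favored group’s rate is flagged for closer review. The group labels and counts below are hypothetical. A flag is a prompt for investigation, not proof of bias.

```python
def selection_rates(outcomes):
    """outcomes maps group -> (number selected, number of applicants)."""
    return {group: selected / total for group, (selected, total) in outcomes.items()}

def disparate_impact(outcomes, threshold=0.8):
    """Return group -> (impact ratio vs. most-favored group, flagged?)."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {group: (rate / best, rate / best < threshold) for group, rate in rates.items()}

# Hypothetical outcomes of an AI-assisted screening step.
outcomes = {"group_a": (48, 100), "group_b": (30, 100)}
print(disparate_impact(outcomes))
```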

Data Privacy and Security in Generative AI: Safeguarding sensitive information.

Generative AI significantly alters data privacy expectations due to its reliance on massive datasets, which frequently include personal, sensitive, or confidential information. The speed and scale at which these tools operate have reshaped what individuals, organizations, and regulators expect regarding data privacy and security, making privacy a proactive and strategic imperative.

Key areas of concern regarding data privacy in generative AI include:

- Data Leakage: Generative AI models have the potential to unintentionally memorize and repeat sensitive data. This risk is particularly heightened when these models are fine-tuned using proprietary or user-submitted content, leading to inadvertent regurgitation of personal data.

- Unauthorized Data Use: A significant concern arises if the data used to train an AI model was collected without explicit consent for that specific purpose. This can lead to privacy violations and regulatory non-compliance, a phenomenon referred to as “purpose drift,” where data collected for one reason is used in entirely different ways through generative models.

- Generation of Sensitive Content: Some generative AI tools are capable of creating outputs that contain personal, discriminatory, or misleading information, whether intentionally or unintentionally. This triggers significant ethical and legal red flags.

Beyond these specific concerns, generative AI systems often retain information in ways that make it difficult to trace or erase, further complicating data privacy management.

To mitigate these risks and maintain trust, organizations are urged to integrate privacy by design principles, conduct AI-specific Data Protection Impact Assessments (DPIAs) for high-risk applications, and ensure transparency in how AI systems utilize data. Best practices for LLM security include:

- Encrypt Data In Transit and at Rest: Protect data in transit with TLS (the protocol behind HTTPS, which superseded SSL), and encrypt stored data so that it is inaccessible without the proper decryption keys.

- Implement Strict Access Controls: Managing who can view or use data within LLMs through multi-factor authentication (MFA) and role-based access control (RBAC). Maintaining stringent access logs and monitoring access patterns helps detect abnormal behavior.

- Anonymize Data During Training: Minimizing privacy risks by anonymizing data (e.g., data masking or pseudonymization) during LLM training to protect individual identities and prevent reverse-engineering.

- Manage and Control Training Data Sources: Ensuring authenticity, accuracy, and security of training data by sourcing from reliable origins, verifying sources, and continuously monitoring for inconsistencies. This includes controlling access and maintaining secure backups.

- Develop and Maintain an Effective Incident Response Plan: Crucial for promptly addressing security breaches, including procedures for assessment, containment, mitigation, and communication with stakeholders. Regular training and drills ensure preparedness.
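Pseudonymization before training can be as simple as replacing direct identifiers with keyed hashes. This sketch assumes that emails and phone numbers are the identifiers of interest. The regexes are deliberately simple, and the key is a hypothetical placeholder. Pseudonymized data generally still counts as personal data under regulations such as the GDPR, so this technique complements the other controls rather than replacing them.

```python
import hashlib
import hmac
import re

# Hypothetical secret; in practice load it from a secrets manager, never from code.
PSEUDONYM_KEY = b"replace-with-a-managed-secret"

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def pseudonymize(value):
    """Keyed hash: equal inputs map to equal tokens, so records can still be joined,
    but tokens cannot be recomputed from guessed values without the key."""
    digest = hmac.new(PSEUDONYM_KEY, value.lower().encode(), hashlib.sha256).hexdigest()
    return f"user_{digest[:12]}"

def scrub(text):
    """Mask phone numbers outright, then replace emails with stable pseudonyms."""
    # Phones first, so hex digits inside generated tokens are never masked.
    text = PHONE.sub("[PHONE]", text)
    return EMAIL.sub(lambda m: pseudonymize(m.group()), text)

record = "Ticket from jane.doe@example.com, call back on +1 (555) 010-2030."
print(scrub(record))
```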

Developers play a unique role in ensuring privacy is engineered into AI systems from the ground up, prioritizing data minimization, using synthetic or anonymized data, and carefully documenting model behavior. User awareness and caution when inputting sensitive information into generative AI tools are also vital.

VII. Future Trends and Strategic Adaptation

Emerging Trends in LLM Optimization: Efficiency, specialization, and multimodal capabilities.

The landscape of Large Language Models (LLMs) is continuously evolving, with significant trends pointing towards enhanced efficiency, greater specialization, and expanded multimodal capabilities.

- Efficiency and Sustainability: A compelling trend is the drive toward creating smaller, more efficient LLMs. Current LLMs consume vast amounts of energy and computational resources, leading to a push for “Green AI.” Projections suggest data center power demand could increase significantly by 2030, making efficiency not just a cost issue but also an environmental imperative. Innovative startups are demonstrating that models with comparable performance can be built at a fraction of the cost, with inference costs dropping by an order of magnitude each year. This enables the development of powerful, cost-effective, and environmentally friendly LLM-powered applications.

- Specialization and Customization: As industries mature in their adoption of AI, there is a growing demand for LLMs tailored to specific applications. Businesses are moving beyond general-purpose models like GPT-4 to domain-specific LLMs, which can be fine-tuned with proprietary data to enhance accuracy, compliance, and efficiency in tasks such as financial forecasting, fraud detection, and personalized healthcare diagnostics. This customization also aims to improve the end-user experience, with companies offering APIs and fine-tuning services that allow organizations to “own” an LLM that understands their specific language and context, leading to more human-like interactions and greater trust in AI systems.

- Multimodal Capabilities: The rapid advancement of Multimodal Large Language Models (MLLMs) is significantly enhancing their reasoning capabilities, allowing them to integrate and understand information from modalities beyond text, including images and video. For example, MLLMs can infer geographic locations from images based solely on visual content, even without explicit geotags, a capability with significant potential that also poses privacy risks. As a result, multimodal content assets (text, video, user-generated content) increasingly appear across modern AI-driven discovery paths.
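For the specialization trend described above, much of the practical work is preparing proprietary examples in the format a fine-tuning service expects. A minimal Python sketch, assuming a chat-style JSONL layout (one `messages` list per line) similar to the one OpenAI documents for fine-tuning chat models; other providers use different schemas, and `to_chat_jsonl`, the system prompt, and the sample content are hypothetical.

```python
import json
from typing import Iterable

# Hypothetical system prompt for an in-house assistant.
SYSTEM_PROMPT = "You are a support assistant for Example Bank."


def to_chat_jsonl(pairs: Iterable[tuple[str, str]], system: str = SYSTEM_PROMPT) -> str:
    """Turn (question, approved answer) pairs into chat-style JSONL:
    one JSON object per line, each holding a system/user/assistant exchange."""
    lines = []
    for question, answer in pairs:
        example = {
            "messages": [
                {"role": "system", "content": system},
                {"role": "user", "content": question},
                {"role": "assistant", "content": answer},
            ]
        }
        # json.dumps without indent keeps each example on a single line.
        lines.append(json.dumps(example, ensure_ascii=False) + "\n")
    return "".join(lines)


if __name__ == "__main__":
    faq = [("How do I reset my card PIN?", "Hypothetical approved answer text.")]
    print(to_chat_jsonl(faq), end="")
```

Using `ensure_ascii=False` keeps non-English text readable in the file, while JSON escaping still turns any newlines inside answers into `\n`, so each training example stays on one line.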

The evolution of LLM architectures towards combining learning and search is a foundational advancement. Recent reasoning LLMs, such as OpenAI’s o3, show how pairing learning with search can push performance to, and on some benchmarks beyond, the level of human experts. This moves beyond traditional LLMs that often “blurt out the first thing that comes to mind” to models that can “think harder” by breaking complex problems into smaller steps: outlining, planning, backtracking, and self-evaluating. By integrating training and inference through reinforcement learning and chain-of-thought reasoning, these models solve problems more robustly and accurately, which is likely to further fuel the trends towards efficiency and specialization.
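As a loose analogy only (this is not how o3 or any specific model is implemented), the propose, check, and backtrack loop can be illustrated with a classic depth-first search. In this hypothetical toy puzzle, each "step" combines two numbers, a membership check stands in for self-evaluation, and a dead end makes the search back up and try the next step. The `solve` function and the puzzle are illustrative assumptions.

```python
from itertools import combinations
from typing import Optional

OPS = {
    "+": lambda a, b: a + b,
    "-": lambda a, b: a - b,
    "*": lambda a, b: a * b,
}


def solve(numbers: list[int], target: int,
          steps: Optional[list[str]] = None) -> Optional[list[str]]:
    """Depth-first search over intermediate steps, backtracking on dead ends.

    Each step combines two remaining numbers into one; the check
    `target in numbers` plays the role of self-evaluation.
    """
    steps = steps or []
    if target in numbers:          # self-evaluation: is the goal reached?
        return steps
    for i, j in combinations(range(len(numbers)), 2):
        a, b = numbers[i], numbers[j]
        rest = [n for k, n in enumerate(numbers) if k not in (i, j)]
        for sym, fn in OPS.items():
            for x, y in ((a, b), (b, a)):
                value = fn(x, y)
                # Propose a step and explore everything that follows from it.
                result = solve(rest + [value], target,
                               steps + [f"{x} {sym} {y} = {value}"])
                if result is not None:
                    return result
    return None  # dead end: the caller backtracks and tries its next step


if __name__ == "__main__":
    print(solve([3, 4, 5], 27))
```

For `[3, 4, 5]` and a target of 27, the search tries and abandons several partial plans before returning `['4 + 5 = 9', '3 * 9 = 27']`, which is the "outline, try, backtrack, verify" pattern in miniature.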

The Future of SEO in the Generative AI Era: Adaptation and continuous learning.

The future of SEO in the generative AI era is characterized by a rapidly evolving definition of “search” and the imperative for marketers to adapt. Generative AI is fundamentally reshaping how people discover, evaluate, and act on information online, so the traditional understanding of search is changing in real time. Search platforms no longer simply retrieve documents; they expand queries, extract passages, and synthesize information into answers. Data suggests that ranking in the top 10 of the SERP gives a page roughly a 25% chance of appearing in an AI Overview, which indicates that different strategies are needed to increase visibility in these new formats.
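To make "extracting passages" concrete, the sketch below splits a page into paragraph-sized passages and ranks them against a query. It assumes plain term-frequency vectors and cosine similarity as a stand-in for the learned vector embeddings that production AI search systems are generally understood to use; `best_passages` and the blank-line splitting rule are hypothetical choices.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-frequency vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def best_passages(page: str, query: str, k: int = 2) -> list[str]:
    """Split a page on blank lines and return the k passages
    most similar to the query."""
    passages = [p.strip() for p in page.split("\n\n") if p.strip()]
    q = Counter(tokenize(query))
    ranked = sorted(passages, key=lambda p: cosine(Counter(tokenize(p)), q), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    page = ("Our bakery opened in 1998.\n\n"
            "We ship sourdough bread nationwide within two days.\n\n"
            "Contact us by phone.")
    print(best_passages(page, "how fast do you ship bread", k=1))
```

One practical reading: a self-contained paragraph that answers a single question tends to be easier to surface under passage-level ranking, whether the similarity measure is lexical, as here, or embedding-based.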

While traditional SEO methods may still yield some results, they are no longer sufficient on their own in these evolving channels; in that sense, SEO in its old form is considered “deprecated.” The pace of change in SEO is unprecedented, so marketers must continuously adapt, retrain, and acquire new skills. These include understanding concepts like “query fan-out” (a newer term for query expansion, with deeper technical implications) and knowing how to use information in a content context. SEO professionals must now work across disciplines beyond text on webpages, including video and the broader content ecosystem, which requires collaboration with teams such as social media and legal.
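Query fan-out can be illustrated in a few lines: one query is expanded into several sub-queries, each sub-query returns its own ranked results, and the lists are merged. This sketch assumes fixed templates for the expansion and uses reciprocal rank fusion (RRF, with the commonly used constant k = 60) for the merge; neither is claimed to be what any particular AI search engine does, and the function names are hypothetical.

```python
from collections import defaultdict


def fan_out(query: str) -> list[str]:
    """Hypothetical fan-out: expand one query into narrower sub-queries.
    Real engines presumably generate these with a model; fixed templates
    are used here only for illustration."""
    templates = ["{q}", "what is {q}", "{q} pricing", "{q} alternatives", "{q} reviews"]
    return [t.format(q=query) for t in templates]


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Merge several ranked result lists: a URL earns 1 / (k + rank)
    from every list it appears in, and the scores are summed."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, url in enumerate(ranking, start=1):
            scores[url] += 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


if __name__ == "__main__":
    print(fan_out("crm software"))
    # One ranked list per sub-query (made-up results for illustration).
    print(reciprocal_rank_fusion([["a.com", "b.com"], ["b.com", "c.com"], ["b.com", "a.com"]]))
```

Under this kind of merge, a page that ranks moderately well for many sub-queries can outrank one that ranks first for only the head query, which is one way to read the advice to build topic-level coverage rather than chase single keywords.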

The high value associated with AI gives SEO professionals an opportunity to reframe their role, potentially leading to larger budgets and salaries, because AI captures the attention of executive leadership. However, tracking performance in the AI era is complex, as traditional metrics like clicks are declining. Marketers need to consider tracking mentions, citations, and whether their phrases are used in AI answers, but there is no direct “Google Search Console for GPT.” Without such a tool, clickstream data becomes crucial for short-term measurement.
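In the absence of a Search Console equivalent, mention and citation tracking usually means saving the answers returned by periodic queries and checking them. A minimal Python sketch, assuming you have already collected each answer's text and cited URLs (collection depends on the tool or API and is not shown); `check_answer`, `mention_rate`, and the example brand are hypothetical.

```python
import re
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass
class AnswerCheck:
    mentioned: bool          # brand name appears in the answer text
    cited: bool              # a cited URL is on the brand's domain or a subdomain
    phrases_used: list[str]  # tracked brand phrases found verbatim


def check_answer(answer: str, cited_urls: list[str], brand: str,
                 domain: str, phrases: list[str]) -> AnswerCheck:
    text = answer.lower()
    domain = domain.lower()
    mentioned = re.search(rf"\b{re.escape(brand.lower())}\b", text) is not None
    hosts = [(urlparse(u).hostname or "").lower() for u in cited_urls]
    cited = any(h == domain or h.endswith("." + domain) for h in hosts)
    used = [p for p in phrases if p.lower() in text]
    return AnswerCheck(mentioned, cited, used)


def mention_rate(checks: list[AnswerCheck]) -> float:
    """Share of saved answers that mention the brand."""
    return sum(c.mentioned for c in checks) / len(checks) if checks else 0.0
```

Tracking `mention_rate` per query over time gives a rough trend line; AI answers vary from run to run, so a single sample says little on its own.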

Ethical considerations are also paramount. Marketers must consider how AI might misrepresent their brands on the basis of user-generated content, or even through malicious attempts to poison LLM outputs. This calls for a holistic SEO perspective that considers the known, latent, shadow, and AI-narrated aspects of a brand.

Practical tips for adaptation include:

- Technical Check: Browse your website with JavaScript disabled to confirm that essential information remains accessible, since some LLM crawlers may handle JavaScript poorly.

- Feedback to LLMs: Provide feedback (e.g., using thumbs down) when LLM outputs for your brand are inaccurate or undesirable.

- Social Listening: Use tools like Perplexity.ai to check what people are saying about your brand on social platforms like Reddit, as this content can be consumed by LLMs and influence brand perception.

- Experiment with Models: Use major AI models (preferably with a paid account) to run queries related to your company daily, weekly, or monthly, tracking results to understand how your brand appears.

- Embrace Omnichannel Content: Think beyond your website and consider your content strategy across the entire ecosystem, including platforms like Reddit, YouTube, and LinkedIn Pulse, as these can influence AI overviews.

- Understand Core Concepts: Learn the nuances of new concepts like “vector embedding,” since much of this space, from retrieval to ranking, is built on such principles.
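The JavaScript check from the tips above can also be scripted. This sketch fetches a page's raw HTML without executing any JavaScript and reports which key phrases are absent from the static text. It assumes you know in advance which phrases matter (prices, product names, contact details); `missing_phrases`, `fetch_html`, and the User-Agent string are hypothetical, and a plain fetch only approximates what any particular LLM crawler sees.

```python
from html.parser import HTMLParser
from urllib.request import Request, urlopen


class VisibleText(HTMLParser):
    """Collect text from raw HTML, skipping <script> and <style> contents.
    No JavaScript runs, roughly like a crawler that does not render pages."""

    def __init__(self) -> None:
        super().__init__()
        self.parts: list[str] = []
        self._skip = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip:
            self._skip -= 1

    def handle_data(self, data):
        if not self._skip:
            self.parts.append(data)


def missing_phrases(html: str, phrases: list[str]) -> list[str]:
    """Return the phrases that do not appear in the page's static text."""
    parser = VisibleText()
    parser.feed(html)
    # Collapse whitespace so line breaks in the markup do not hide matches.
    text = " ".join(" ".join(parser.parts).split()).lower()
    return [p for p in phrases if p.lower() not in text]


def fetch_html(url: str) -> str:
    """Download raw HTML without rendering it."""
    req = Request(url, headers={"User-Agent": "no-js-check/0.1"})
    with urlopen(req, timeout=10) as resp:
        charset = resp.headers.get_content_charset() or "utf-8"
        return resp.read().decode(charset, errors="replace")
```

Usage, with your own page in place of the placeholder URL: `missing_phrases(fetch_html("https://www.example.com/pricing"), ["Plans start at"])`. Any phrase in the result is likely being injected by JavaScript and may be invisible to non-rendering crawlers.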

The industry is currently in a turbulent phase, with many new tools emerging and a likely future of consolidation. However, for those willing to adapt and learn, there is significant opportunity.

VIII. Conclusions and Recommendations

The shift from traditional SEO to Generative Engine Optimization (GEO) is not merely an evolution but a fundamental redefinition of how digital content achieves visibility and influence. The diminishing reliance on direct clicks in favor of AI-generated answers and summaries necessitates a strategic reorientation for all digital marketers.

To thrive in this AI-driven search landscape, the following conclusions and recommendations are paramount:

- Prioritize Structured Data as Foundational Infrastructure: Schema Markup, particularly in JSON-LD format, is no longer an optional enhancement but a critical enabler for AI comprehension and content surfacing. Organizations should conduct a comprehensive audit of their existing content to identify opportunities for implementing and enhancing schema, focusing on schema.org types such as Article, FAQPage, Product, LocalBusiness, HowTo, Organization, and Person. This proactive approach builds a “page-level knowledge graph” that explicitly guides AI, reducing ambiguity and increasing the likelihood that content is trusted and cited.

- Redefine Content Strategy for AI Consumption: Content must be crafted with machine readability in mind, alongside human readability. This involves adopting clear, hierarchical structuring with descriptive headings, bullet points, and comparison tables. The focus should shift from isolated keywords to comprehensive topic authority, driven by a deep understanding of user intent across all stages of the customer journey. This semantic approach ensures content provides holistic answers, anticipating user needs and fostering longer engagement, which AI systems favor.

- Cultivate a Robust and Consistent Digital Footprint: AI models validate a brand’s authority and credibility by aggregating signals from its entire online presence, not just its website. Therefore, consistent branding, active participation in community forums (e.g., Reddit, Quora), optimized profiles on review platforms, and strategic digital PR for mentions in authoritative third-party domains are crucial. This holistic approach builds a strong reputation that AI systems are more likely to trust and reference.

- Embrace Multimodal Content Optimization: With the rise of Multimodal Large Language Models (MLLMs), optimizing content extends beyond text to include images and video. Marketers must ensure that visual and audio assets are discoverable and interpretable by AI through descriptive metadata, accurate video transcripts, and well-written alt text and captions for images. This expands the potential surface area for AI-driven discovery.

- Adopt New Measurement Frameworks for Generative AI ROI: Traditional metrics alone are insufficient. Organizations must transition to tracking citation frequency, source attribution quality, and response ranking within AI answers. This requires investing in specialized AI search visibility tracking tools and configuring custom channel groupings in Google Analytics to accurately attribute AI-driven traffic. Case studies report that GEO can lead to significant increases in AI citations, qualified traffic, and conversion rates, which can justify the investment.

- Implement a Balanced Human-AI Content Creation Approach: Relying solely on AI-generated content can be detrimental to SEO performance. The optimal strategy uses AI for efficiency in tasks like keyword research and content outlining, then critically enriches and refines its output with human expertise, unique insights, and brand voice. Multi-tiered prompting and rigorous human fact-checking are essential to ensure factual accuracy and authenticity and to avoid the pitfalls of generic or inaccurate AI content.

- Proactively Address Ethical and Security Considerations: As AI systems become more integral, managing risks associated with hallucinations, algorithmic bias, and data privacy is paramount for building and maintaining trust. Implementing strategies for hallucination mitigation (e.g., multi-agent orchestration, specific prompting), addressing algorithmic bias (e.g., diverse training data, human oversight), and ensuring robust data privacy (e.g., encryption, access controls, anonymization) are non-negotiable for sustainable GEO success.

- Foster a Culture of Continuous Adaptation and Learning: The AI landscape is dynamic, with trends pointing towards more efficient, specialized, and multimodal LLMs. Digital marketing teams must embrace continuous learning, adapt their skills, and stay abreast of new technologies and best practices. This agility will be key to navigating the ongoing transformation of search and securing a competitive advantage in the AI era.
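As a starting point for the schema audit recommended above, the snippet below generates JSON-LD for two of the listed types, FAQ (schema.org `FAQPage`) and Organization. It is a minimal sketch: the helper names and sample content are hypothetical, only a few properties are shown, FAQ markup should describe questions and answers that are actually visible on the page, and the output should still be checked with a validator such as the Schema Markup Validator or Google's Rich Results Test.

```python
import json


def jsonld_script(data: dict) -> str:
    """Serialize a schema.org object into a JSON-LD <script> tag.
    '</' is escaped so text containing '</script>' cannot close the tag early."""
    body = json.dumps(data, indent=2, ensure_ascii=False).replace("</", "<\\/")
    return f'<script type="application/ld+json">\n{body}\n</script>'


def faq_page(qa_pairs: list[tuple[str, str]]) -> dict:
    """FAQPage with one Question/Answer entity per pair."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in qa_pairs
        ],
    }


def organization(name: str, url: str, same_as: list[str]) -> dict:
    # sameAs links tie the site to the brand's profiles elsewhere on the web.
    return {
        "@context": "https://schema.org",
        "@type": "Organization",
        "name": name,
        "url": url,
        "sameAs": same_as,
    }


if __name__ == "__main__":
    print(jsonld_script(faq_page([("Do you ship internationally?", "Sample answer text.")])))
```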

By embracing these strategic imperatives, organizations can effectively navigate the complexities of the AI-driven search landscape, transforming the challenges into opportunities for enhanced visibility, deeper engagement, and measurable business growth.
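For the Google Analytics recommendation (custom channel groupings for AI-driven traffic), the classification logic can be sketched as a regular expression over the referrer hostname. The hostname list below is an illustrative, incomplete assumption that needs regular review, and `channel_for` is hypothetical. The same pattern is written to be compatible with the RE2 syntax GA4 uses, so it could serve as a starting point for a "source matches regex" condition, but check the exact source values in your own reports first.

```python
import re
from urllib.parse import urlparse

# Illustrative, incomplete list of AI assistant hostnames; products and
# domains change, so review it regularly.
AI_REFERRER = re.compile(
    r"(^|\.)(chatgpt\.com|chat\.openai\.com|perplexity\.ai|gemini\.google\.com"
    r"|copilot\.microsoft\.com|claude\.ai)$"
)


def channel_for(referrer_url: str) -> str:
    """Assign a referrer URL to a hypothetical 'AI Assistants' channel."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if AI_REFERRER.search(host):
        return "AI Assistants"
    return "Other"
```

Anchoring the pattern on a leading dot or start of string, and on the end of the hostname, keeps look-alike domains such as `notperplexity.ai` out of the AI channel.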
